Research

The primary focus of my research is on developing robots that can adapt to and coexist with humans in our dynamic world. This involves creating systems capable of operating in constantly changing, unstructured environments with limitless variations in the appearance and positioning of objects. The robots must be able to adapt to changes, learn from experiences and human interactions, and do so with minimal data. My research centers on data-efficient learning from multi-sensory inputs to endow robots with the dexterity and advanced reasoning needed to perform complex tasks autonomously. I am particularly interested in applications of touch sensing in robotic manipulation, e.g. object perception, success prediction and replanning. Below are some research directions and selected relevant papers.

Object modeling and scene understanding

-

Implicit surface learning is widely used for 3D surface reconstruction from raw point cloud data. Current approaches employ deep neural networks or Gaussian process models, with trade-offs among computational performance, object fidelity, and generalization capabilities. We propose a novel Gaussian process regression based method to build implicit surfaces for 3D surface reconstruction, which leads to better accuracy. Our approach encodes local and global shape information from the data to maintain the correct topology of the underlying shape. The proposed pipeline works on dense, sparse, and noisy raw point clouds and can be parallelized to improve computational efficiency. We evaluate our approach on synthetic and real point cloud datasets built using visual and tactile sensors. Results show that our approach achieves higher accuracy than the baselines.
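As a rough illustration of the general idea of a Gaussian process implicit surface (not the method proposed in the paper), the sketch below fits a plain GP regressor to 2D points labelled 0 on the surface, -1 inside, and +1 outside, and reads the shape off as the zero level set of the predictive mean. The kernel, length scale, noise level, labels, and the toy circle data are all assumptions chosen for the example.

```python
import math

def rbf(a, b, length=0.7):
    """Squared-exponential kernel between two 2D points (length scale is an assumption)."""
    d2 = (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2
    return math.exp(-0.5 * d2 / length ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def fit_gpis(points, labels, noise=1e-4):
    """Return the GP predictive mean f(x); the surface estimate is the set where f(x) = 0."""
    K = [[rbf(p, q) + (noise if i == j else 0.0) for j, q in enumerate(points)]
         for i, p in enumerate(points)]
    alpha = solve(K, labels)
    return lambda x: sum(a * rbf(x, p) for a, p in zip(alpha, points))

# Toy data: 8 points on the unit circle (surface), 8 on a radius-2 circle (outside),
# and the origin (inside).
angles = [k * math.pi / 4 for k in range(8)]
surface = [(math.cos(t), math.sin(t)) for t in angles]
outside = [(2 * math.cos(t), 2 * math.sin(t)) for t in angles]
points = surface + outside + [(0.0, 0.0)]
labels = [0.0] * 8 + [1.0] * 8 + [-1.0]

f = fit_gpis(points, labels)
inside_value = f((0.3, 0.0))    # negative: query lies inside the estimated surface
outside_value = f((0.0, 1.6))   # positive: query lies outside
on_surface_value = f((1.0, 0.0))  # close to zero: query is a surface observation
```

The sign of `f` classifies a query point as inside or outside, and the same predictive model also yields a variance, which is what makes this family of representations convenient for combining visual and tactile measurements.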

-

Object shape information is an important parameter in robot grasping tasks. However, obtaining accurate models of novel objects may be difficult due to incomplete and noisy sensory measurements. In addition, object shape may change due to frequent interaction with the object (e.g., cereal boxes). In this paper, we present a probabilistic approach for learning object models based on visual and tactile perception through physical interaction with an object. Our robot explores unknown objects by touching them strategically at parts where the shape is uncertain. The robot starts by using only visual features to form an initial hypothesis about the object shape, then gradually adds tactile measurements to refine the object model. Our experiments involve ten objects of varying shapes and sizes in a real setup. The results show that our method is capable of choosing a small number of touches to construct object models similar to real object shapes and to determine similarities among acquired models.
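The strategy of touching where the shape is most uncertain can be sketched, under simplifying assumptions, as picking the candidate location with the largest Gaussian process predictive variance. This is an illustration only and not the paper's implementation: the kernel, its length scale, the noise level, and the toy geometry (visual observations on the camera-facing side of a unit circle) are assumptions.

```python
import math

def rbf(a, b, length=0.7):
    """Squared-exponential kernel between two 2D points (length scale is an assumption)."""
    d2 = (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2
    return math.exp(-0.5 * d2 / length ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def predictive_variance(x, observed, noise=1e-4):
    """GP predictive variance at x: k(x, x) - k_x^T K^{-1} k_x."""
    K = [[rbf(p, q) + (noise if i == j else 0.0) for j, q in enumerate(observed)]
         for i, p in enumerate(observed)]
    k = [rbf(x, p) for p in observed]
    v = solve(K, k)
    return rbf(x, x) - sum(ki * vi for ki, vi in zip(k, v))

def next_touch(candidates, observed):
    """Pick the candidate touch location where the model is least certain."""
    return max(candidates, key=lambda c: predictive_variance(c, observed))

# Visual observations cover only the side of a unit circle facing the camera (+x).
observed = [(math.cos(math.radians(a)), math.sin(math.radians(a)))
            for a in (-60, -30, 0, 30, 60)]
candidates = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0)]
chosen = next_touch(candidates, observed)  # the occluded far side, (-1.0, 0.0)
```

After each touch, the new measurement would be appended to `observed` and the selection repeated, so later touches move to whichever region remains least explained.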

-

We present a multi-finger sliding touch strategy for efficient shape exploration using a Bayesian Optimization approach and a single-leader-multi-follower strategy for multi-finger smooth local surface perception. We evaluate our proposed method by estimating the 3D shape of objects from the YCB and OCRTOC datasets based on simulation and real robot experiments. The proposed approach yields successful reconstruction results relying on only a few continuous sliding touches.

-

We present a probabilistic learning framework to form object hypotheses through interaction with the environment. A robot learns how to manipulate objects through pushing actions to identify how many objects are present in the scene. We use a segmentation system that initializes object hypotheses based on RGBD data and adopt a reinforcement learning approach to learn the relations between pushing actions and their effects on object segmentations. Trained models are used to generate actions that result in a minimum number of pushes on object groups, until either object separation events are observed or it is ensured that only one object is being acted on. We provide baseline experiments showing that a policy based on reinforcement learning for action selection results in fewer pushes.


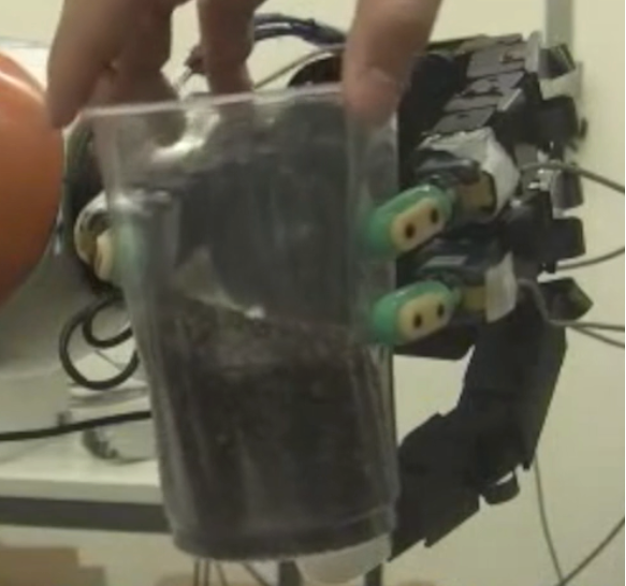

Multimodal (tactile, visual, and proprioceptive) sensing for grasping and manipulation

-

Predicting grasp success is useful for avoiding failures in many robotic applications. Based on reasoning in wrench space, we address the question of how well analytic grasp success prediction works if tactile feedback is incorporated. Tactile information can alleviate contact placement uncertainties and facilitates contact modeling. We introduce a wrench-based classifier and evaluate it on a large set of real grasps. The key finding of this work is that exploiting tactile information allows wrench-based reasoning to perform on a par with existing methods based on learning or simulation. Unlike these methods, the suggested approach has no need for training data, requires little modeling effort and is computationally efficient. Furthermore, our method affords task generalization by considering the capabilities of the grasping device and expected disturbance forces/moments in a physically meaningful way.

-

We present a probabilistic framework for grasp modeling and stability assessment. The framework facilitates assessment of grasp success in a goal-oriented way, taking into account both geometric constraints for task affordances and stability requirements specific to a task. We integrate high-level task information introduced by a teacher in a supervised setting with low-level stability requirements acquired through a robot's self-exploration. The conditional relations between tasks and multiple sensory streams (vision, proprioception and tactile) are modeled using Bayesian networks. The generative modeling approach allows prediction of grasp success and provides insights into dependencies between variables and features relevant for object grasping.

-

An important ability of a robot that interacts with the environment and manipulates objects is to deal with the uncertainty in sensory data. Sensory information is necessary to, for example, perform an online assessment of grasp stability. We present methods to assess grasp stability based on haptic data and machine-learning methods, including AdaBoost, support vector machines (SVMs), and hidden Markov models (HMMs). In particular, we study the effect of different sensory streams on grasp stability. This includes object information such as shape; grasp information such as approach vector; tactile measurements from fingertips; and joint configuration of the hand. Sensory knowledge affects the success of the grasping process both in the planning stage (before a grasp is executed) and during the execution of the grasp (closed-loop online control). In this paper, we study both of these aspects. We propose a probabilistic learning framework to assess grasp stability and demonstrate that knowledge about grasp stability can be inferred using information from tactile sensors. Experiments on both simulated and real data are shown. The results indicate that the learning approach is applicable in realistic scenarios, which opens a number of interesting avenues for future research.

Grasp and motion planning

-

This paper addresses the problem of simultaneously exploring an unknown object to model its shape, using tactile sensors on robotic fingers, while also improving finger placement to optimise grasp stability. In many situations, a robot will have only a partial camera view of the near side of an observed object, for which the far side remains occluded. We show how an initial grasp attempt, based on an initial guess of the overall object shape, yields tactile glances of the far side of the object which enable the shape estimate and consequently the successive grasps to be improved. We propose a grasp exploration approach using a probabilistic representation of shape, based on Gaussian Process Implicit Surfaces. This representation enables initial partial vision data to be augmented with additional data from successive tactile glances. This is combined with a probabilistic estimate of grasp quality to refine grasp configurations. When choosing the next set of finger placements, a bi-objective optimisation method is used to mutually maximise grasp quality and improve shape representation during successive grasp attempts. Experimental results show that the proposed approach yields stable grasp configurations more efficiently than a baseline method, while also yielding an improved shape estimate of the grasped object.
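One generic way to trade off the two objectives named above is sketched here: keep only the Pareto-optimal candidates, then pick one with a weighted sum. This is not necessarily the bi-objective method used in the paper. The candidate names, the `quality` and `uncertainty` scores, and the `weight` parameter are hypothetical values made up for the example; in practice they would come from the grasp-quality estimate and the shape model.

```python
def pareto_front(candidates):
    """Return candidates not dominated in (quality, uncertainty); both are maximised."""
    front = []
    for c in candidates:
        dominated = any(
            o["quality"] >= c["quality"]
            and o["uncertainty"] >= c["uncertainty"]
            and (o["quality"] > c["quality"] or o["uncertainty"] > c["uncertainty"])
            for o in candidates
        )
        if not dominated:
            front.append(c)
    return front

def select_grasp(candidates, weight=0.5):
    """Pick one Pareto-optimal candidate; weight near 1 favours grasp quality,
    weight near 0 favours touching uncertain regions of the shape."""
    front = pareto_front(candidates)
    return max(front, key=lambda c: weight * c["quality"] + (1 - weight) * c["uncertainty"])

# Hypothetical finger-placement candidates with made-up scores in [0, 1].
candidates = [
    {"name": "A", "quality": 0.9, "uncertainty": 0.1},
    {"name": "B", "quality": 0.6, "uncertainty": 0.7},
    {"name": "C", "quality": 0.5, "uncertainty": 0.5},  # dominated by B
    {"name": "D", "quality": 0.2, "uncertainty": 0.9},
]

front_names = [c["name"] for c in pareto_front(candidates)]
balanced = select_grasp(candidates, weight=0.5)["name"]
exploit = select_grasp(candidates, weight=0.9)["name"]
explore = select_grasp(candidates, weight=0.1)["name"]
```

Shifting `weight` towards 1 over successive attempts would move the behaviour from exploring the occluded shape to committing to a stable grasp.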

-

This paper shows how a robot arm can follow and grasp moving objects tracked by a vision system, as is needed when a human hands over an object to the robot during collaborative working. While the object is being arbitrarily moved by the human co-worker, a set of likely grasps, generated by a learned grasp planner, are evaluated online to generate a feasible grasp with respect to both: the current configuration of the robot respecting the target grasp; and the constraints of finding a collision-free trajectory to reach that configuration. A task-based cost function enables relaxation of motion-planning constraints, enabling the robot to continue following the object by maintaining its end-effector near to a likely pre-grasp position throughout the object’s motion. We propose a method of dynamic switching between: a local planner, where the hand smoothly tracks the object, maintaining a steady relative pre-grasp pose; and a global planner, which rapidly moves the hand to a new grasp on a completely different part of the object, if the previous graspable part becomes unreachable. Various experiments are conducted using a real collaborative robot and the obtained results are discussed.

-

Numerous grasp planning algorithms have been proposed since the 1980s. The grasping literature has expanded rapidly in recent years, building on greatly improved vision systems and computing power. Methods have been proposed to plan stable grasps on known objects (exact 3D model is available), familiar objects (e.g. exploiting a-priori known grasps for different objects of the same category), or novel object shapes observed during task execution. Few of these methods have ever been compared in a systematic way, and objective performance evaluation of such complex systems remains problematic. Difficulties and confounding factors include different assumptions and amounts of a-priori knowledge in different algorithms; different robots, hands, vision systems and setups in different labs; and different choices or application needs for grasped objects. Also, grasp planning can use different grasp quality metrics (including empirical or theoretical stability measures) or other criteria, e.g., computational speed, or combination of grasps with reachability considerations. While acknowledging and discussing the outstanding difficulties surrounding this complex topic, we propose a methodology for reproducible experiments to compare the performance of a variety of grasp planning algorithms. Our protocol attempts to improve the objectivity with which different grasp planners are compared by minimizing the influence of key components in the grasping pipeline, e.g., vision and pose estimation. The protocol is demonstrated by evaluating two different grasp planners: a state-of-the-art model-free planner and a popular open-source model-based planner. We show results from real-robot experiments with a 7-DoF arm and 2-finger hand, as well as simulation-based evaluations.

-

In this work, we present a novel representation which enables a robot to reason about, transfer and optimize grasps on various objects by representing objects and grasps on them jointly in a common space. In our approach, objects are parametrized using smooth differentiable functions which are obtained from point cloud data via a spectral analysis. We show how, starting with point cloud data of various objects, one can utilize this space consisting of grasps and smooth surfaces in order to continuously deform various surface/grasp configurations with the goal of synthesizing force closed grasps on novel objects. We illustrate the resulting shape space for a collection of real world objects using multidimensional scaling and show that our formulation naturally enables us to use gradient ascent approaches to optimize and simultaneously deform a grasp from a known object towards a novel object.

-

We introduce a framework for robot motion planning based on variational Gaussian processes, which unifies and generalizes various probabilistic-inference-based motion planning algorithms, and connects them with optimization-based planners. Our framework provides a principled and flexible way to incorporate equality-based, inequality-based, and soft motion-planning constraints during end-to-end training, is straightforward to implement, and provides both interval-based and Monte-Carlo-based uncertainty estimates. We conduct experiments using different environments and robots, comparing against baseline approaches based on the feasibility of the planned paths, and obstacle avoidance quality. Results show that our proposed approach yields a good balance between success rates and path quality.

-

This paper presents a novel Learning from Demonstration (LfD) method that uses neural fields to learn new skills efficiently and accurately. It achieves this by utilizing a shared embedding to learn both scene and motion representations in a generative way. Our method smoothly maps each expert demonstration to a scene-motion embedding and learns to model them without requiring hand-crafted task parameters or large datasets. It achieves data efficiency by enforcing scene and motion generation to be smooth with respect to changes in the embedding space. At inference time, our method can retrieve scene-motion embeddings using test time optimization, and generate precise motion trajectories for novel scenes. Experimental results demonstrate that the proposed method outperforms the baseline approaches and generalizes to novel scenes.

-

Model-based reinforcement learning aims to learn a policy to solve a target task by leveraging a learned dynamics model. This approach, paired with principled handling of uncertainty allows for data-efficient policy learning in robotics. However, the physical environment has feasibility and safety constraints that need to be incorporated into the policy before it is safe to execute on a real robot. In this work, we study how to enforce the aforementioned constraints in the context of model-based reinforcement learning with probabilistic dynamics models. In particular, we investigate how trajectories sampled from the learned dynamics model can be used on a real robot, while fulfilling user-specified safety requirements. We present a model-based reinforcement learning approach using Gaussian processes where safety constraints are taken into account without simplifying Gaussian assumptions on the predictive state distributions. We evaluate the proposed approach on different continuous control tasks with varying complexity and demonstrate how our safe trajectory-sampling approach can be directly used on a real robot without violating safety constraints.
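The idea of checking safety directly on sampled trajectories, rather than on a Gaussian approximation of the state distribution, can be illustrated with a small Monte Carlo sketch. It is not the paper's algorithm: the 1D dynamics function stands in for a learned probabilistic model, and the noise level, state limit, sample count, and 0.95 acceptance threshold are all assumptions.

```python
import math
import random

def sample_trajectory(x0, actions, rng, noise_std=0.05):
    """Roll out one trajectory under a hypothetical stochastic 1D dynamics model."""
    x, traj = x0, [x0]
    for u in actions:
        # Nonlinear mean plus Gaussian noise, so multi-step state
        # distributions are in general not Gaussian.
        x = x + 0.1 * math.sin(x) + u + rng.gauss(0.0, noise_std)
        traj.append(x)
    return traj

def prob_safe(x0, actions, limit, n_samples=500, seed=0):
    """Empirical probability that every state of a rollout satisfies |x| <= limit."""
    rng = random.Random(seed)
    safe = 0
    for _ in range(n_samples):
        traj = sample_trajectory(x0, actions, rng)
        if all(abs(x) <= limit for x in traj):
            safe += 1
    return safe / n_samples

def is_safe(x0, actions, limit, threshold=0.95):
    """Accept an action sequence only if it is safe with high estimated probability."""
    return prob_safe(x0, actions, limit) >= threshold

gentle = [0.1] * 5      # small steps
aggressive = [1.0] * 5  # large steps that quickly leave a tight limit
```

For instance, `is_safe(0.0, gentle, limit=10.0)` accepts the plan, while `is_safe(0.0, aggressive, limit=0.5)` rejects it because every sampled rollout leaves the allowed region after the first step.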

Grasp adaptation

-

To perform robust grasping, a multi-fingered robotic hand should be able to adapt its grasping configuration, i.e., how the object is grasped, to maintain the stability of the grasp. Such a change of grasp configuration is called grasp adaptation, and it depends on the controller, the employed sensory feedback and the type of uncertainties inherent to the problem. This paper proposes a grasp adaptation strategy to deal with uncertainties about physical properties of objects, such as the object weight and the friction at the contact points. Based on an object-level impedance controller, a grasp stability estimator is first learned in the object frame. Once a grasp is predicted to be unstable by the stability estimator, a grasp adaptation strategy is triggered according to the similarity between the new grasp and the training examples. Experimental results demonstrate that our method improves the grasping performance on novel objects with different physical properties from those used for training.

-

We present a probabilistic model for joint representation of several sensory modalities and action parameters in a robotic grasping scenario. Our non-linear probabilistic latent variable model encodes relationships between grasp-related parameters, learns the importance of features, and expresses confidence in estimates. The model learns associations between stable and unstable grasps that it experiences during an exploration phase. We demonstrate the applicability of the model for estimating grasp stability, correcting grasps, identifying objects based on tactile imprints and predicting tactile imprints from object-relative gripper poses. We performed experiments on a real platform with both known and novel objects, i.e., objects the robot trained with, and previously unseen objects. Grasp correction had a 75% success rate on known objects, and 73% on new objects. We compared our model to a traditional regression model that succeeded in correcting grasps in only 38% of cases.

-

This work addresses the problem of transferring a grasp experience or a demonstration to a novel object that shares shape similarities with objects the robot has previously encountered.

Applications

-

SARAFun, Smart Assembly Robot with Advanced FUNctionalities, H2020

The SARAFun project focused on enabling a non-expert user to integrate a new bi-manual assembly task, such as insertion or folding, through observing the task being performed by a human instructor, on a YuMi robot in less than a day.

-

Towards Advanced Robotic Manipulation for Nuclear Decommissioning: A Pilot Study on Tele-Operation and Autonomy, IEEE International Conference on Robotics and Automation for Humanitarian Applications 2016, the Best Paper Award

Despite enormous remote handling requirements, there has been remarkably little use of robots by the nuclear industry. The few robots deployed have been directly teleoperated in rudimentary ways, with no advanced control methods or autonomy. Most remote handling is still done by an aging workforce of highly skilled experts, using 1960s style mechanical Master-Slave devices. In contrast, this paper explores how novice human operators can rapidly learn to control modern robots to perform basic manipulation tasks; also how autonomous robotics techniques can be used for operator assistance, to increase throughput rates, decrease errors, and enhance safety.

-

We study how to develop a mobile manipulator combining vision and force sensing for achieving everyday manipulation tasks, e.g. door opening.

-

We develop efficient data-driven methods for picking objects of varying shapes, sizes and materials, from clutter.